When I started Market Prescience, the aim was to create a market research and analyst firm that put hard data and underpinning content at its core. To really offer that depth and expertise, I decided to learn more about developing data analytics and machine learning tools.

Back to school, back to reality

I had always kept my programming skills from completely atrophying, but my C++, Visual Basic, SQL and a smattering of 8088 assembler didn’t really seem like the right tools (although the SQL has been very handy). I had been using Python and R a little over the past 10 years; for example, I used Python to build a tool to find clusters in survey data. So, I doubled down on the Python I already knew, reinforced the basics and got myself to the point where I could quickly solve problems on LeetCode. I wrote a neat app to find all the charts in an Excel spreadsheet and transfer them to PowerPoint as native PowerPoint charts, so when you send someone the PowerPoint you don’t need to mess around with linked Excel files. I also wrote a couple of apps to help with my daughter’s home schooling to test her phonics and geography!

The great thing about an analyst using technology to build a business is that concepts that had become throw-away comments from my analyst days, like technical debt, became real very quickly. Although I did do some commercial coding at the start of my career, I’d forgotten the reason why you code smart, why you make functions reusable and develop code so its base is as universal as possible. I’d been talking about the applications management space for 20 years, but getting close, even in this limited way, made the whole thing more real for me. This injection of reality at this point in my career is really useful, particularly as I’d been away from the coalface of IT for 20+ years.

Writing snippets of code is all very nice, but what next?

Now to learn machine learning. Gulp. What I would say is I picked a good time to start. Five years ago there were no good tools for someone without a mastery of high-level mathematics and statistics. Now you can achieve in 5-10 lines of Python running on Google Colaboratory what would have taken 200-500 lines of code 5 years ago. It’s good that my academic preparation was OK, if a bit rusty, so I could at least follow how neural network backpropagation works even if I can’t recite it and build first-principles code to implement it. Well, not without 10 books, the Papers with Code website and referring to Stack Overflow every 10 minutes. The beauty of modern tools like scikit-learn, PyTorch and TensorFlow is that you don’t have to know how to do this.

So, I did a couple of stiff courses on machine learning and deep learning with TensorFlow. I also bolstered my Python with a better understanding of the data analytics library pandas and a refresher in SQL, which is essential if you are going to do anything beyond experimentation. I already used Tableau, but I also spent time learning how to get more out of it.

To practise these skills, I’ve built and optimised the de rigueur food analysis application, which identifies the food you are eating from a picture. I’ve also built an app that estimates house prices using historic data and learned how to build a spam filter. These projects are the machine learning training wheels, where you see how computer vision, regression and text analysis work and learn the principles of optimising these types of models from data.

Abstraction drives AI Adoption

From Artificial Intelligence to Machine Learning, to automation and even to quantum computing, abstraction is causing the biggest shifts in the way people interact with technology and, consequently, in the amount of help and level of human-delivered services they need.

As we have experienced over the last decade or so, the IT services industry has been adjusting to the impacts of this abstraction, with custom mega deals giving way to smaller configurable engagements as using the underlying technology became simpler. Successful firms have learned how to automate and scale down quickly. This has been driven largely by the shift from traditional IT to digital/cloud/agile and by tools that abstract the complexity of technology, simplifying previously complex operations and opening technology up to ever-increasing numbers of consumers and corporate constituents.

The impact on AI and Machine Learning (ML) has been immense, with Python and R becoming gateways to huge machine learning tools. Even complex technical tasks like building a neural network can now be achieved in fewer than 10 lines of code, shifting the balance of the challenge from high-end mathematical and statistical ability to optimisation of the task itself: getting better data, changing the make-up of the layers and so on.

This opens up machine learning to practitioners, not just academics.
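As a rough illustration of how little code a working neural network now takes, here is a minimal sketch using scikit-learn. The dataset (scikit-learn's bundled handwritten digits), the layer sizes and the other settings are assumptions chosen for illustration, not a model from any of the projects described in this post.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Small bundled dataset of 8x8 handwritten digit images (illustrative choice).
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Two hidden layers; hidden_layer_sizes is the "make-up of the layers" knob.
model = MLPClassifier(hidden_layer_sizes=(64, 32), max_iter=500, random_state=0)
model.fit(X_train, y_train)

# Share of held-out images classified correctly.
accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.2f}")
```

The mathematics of training is hidden inside `fit`; what is left to the practitioner is choosing the data and tuning settings such as the layer sizes.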

The real test is now

So what? It is great that I know how ML apps are built because I have done it. I figure this will make me a better analyst, particularly if I want to focus on AI and quantum computing as my core topics of research. However, what I really want to do is use this to collect and analyse data more effectively.

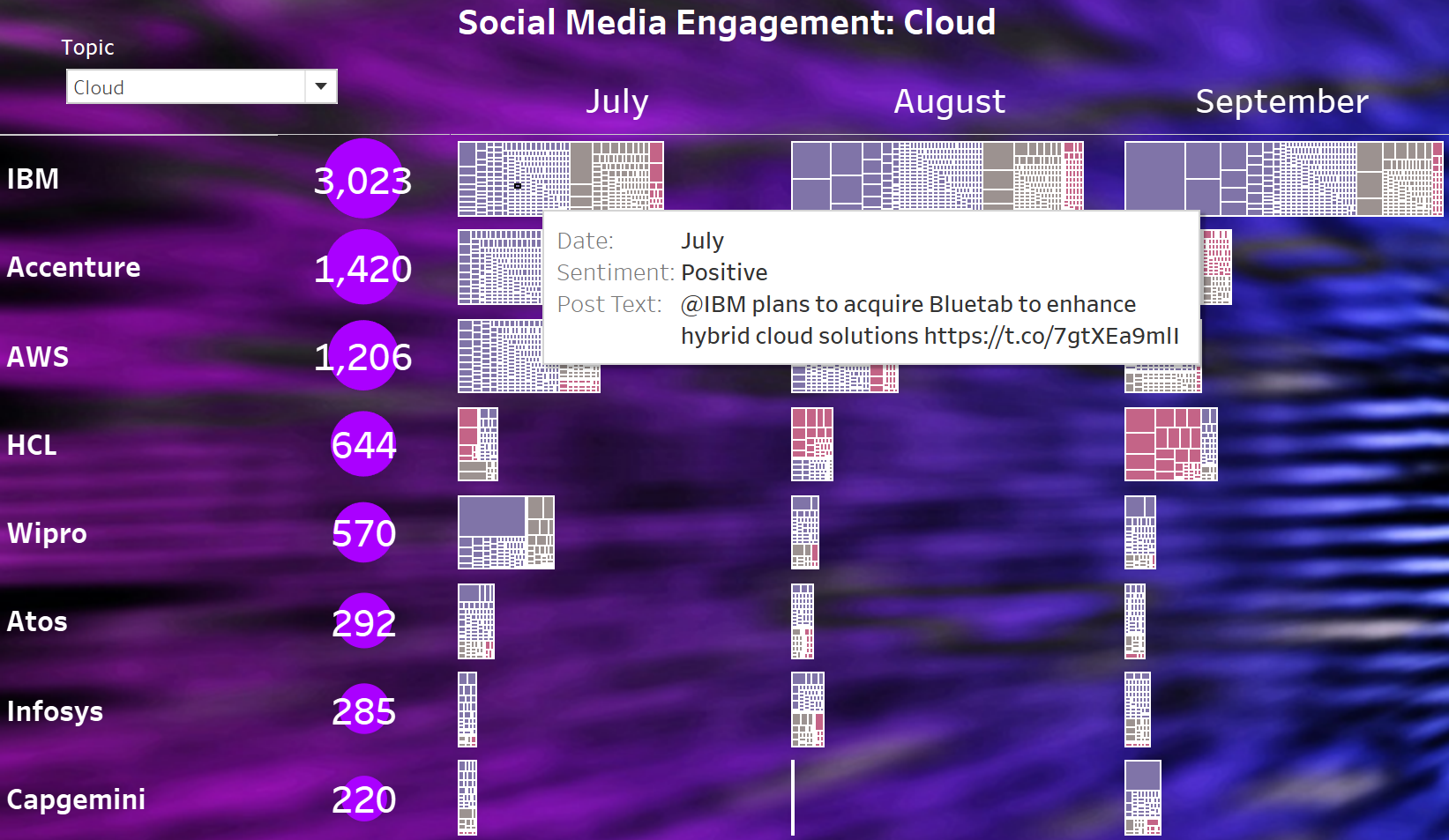

Initially, I have been looking at data from social media platforms to see if I can gauge the sentiment of tweets directed at groups of companies. I also want to see if I can use social media to get an idea of the technology topics people are interested in and the stage of maturity of some of these discussions, and eventually advise on the best way to market technology services, the best ways to approach engagements, what potential customers are looking for, and so on.

The current tools on Market Prescience are at stage one of this process. I collect the raw data from social media platforms directly using an API, but I will look at web scraping and other methods to get to platforms without good APIs. Then I use a sentiment analyser to get an idea of whether the post is broadly positive or negative. Currently, I am using VADER, an off-the-shelf sentiment tool that is part of a Python library. Although this is quite good (you see accuracy of 80-85% quoted), it runs into difficulties with social media posts where the sentiment isn’t clear even to a human, or where the subject of the post or who it is directed at is not clear, again even to a human. I am training my own model, but I am still optimising it and it just isn’t as good as VADER yet.
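The scoring step might look something like the sketch below. It assumes the standalone `vaderSentiment` package (VADER is also available through NLTK, and this post does not depend on which one is used) and the ±0.05 compound-score cut-offs commonly suggested for VADER. The function names `label_from_compound` and `score_posts` and the sample posts are hypothetical.

```python
def label_from_compound(compound, threshold=0.05):
    """Map a VADER compound score (-1 to 1) to a coarse sentiment label."""
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"


def score_posts(posts, threshold=0.05):
    """Return a (post, compound, label) tuple for each post, scored by VADER."""
    # Imported here so the labelling helper works without the package installed.
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

    analyzer = SentimentIntensityAnalyzer()
    results = []
    for post in posts:
        compound = analyzer.polarity_scores(post)["compound"]
        results.append((post, compound, label_from_compound(compound, threshold)))
    return results


# Made-up posts standing in for data collected through a platform API.
sample_posts = [
    "Really impressed with the support team today, great job!",
    "Third outage this month. This is unacceptable.",
    "Announcing our quarterly results on Thursday.",
]

try:
    for post, compound, label in score_posts(sample_posts):
        print(f"{label:>8}  {compound:+.2f}  {post}")
except ImportError:
    print("Install the vaderSentiment package to run the scoring step.")
```

Raising `threshold` widens the neutral band, which is one crude way to label more borderline posts as neutral rather than forcing them into positive or negative.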

Is it good enough?

This highlights a key challenge for anyone using an ML or AI solution. What is good enough? Projects get stalled by the drive to get beyond the elusive 95% accuracy point and to overcome subjectivity issues like the one mentioned above, particularly with edge cases and outliers. Personally, I think the technology needs a break. Take the sentiment analysis we are doing, for example. I am trying to train my model to increase the neutral count for subjective cases, because that is what a human would do, but I am aware that 15-20% of the sentiment analysis is potentially wrong and I could be criticised for not going in and correcting these “mistakes”, particularly when they might show that one organisation has more negative posts directed at it than another. However, all the organisations are equally affected by these rates of error and this is the nature of learning.
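A back-of-the-envelope way to see why an equal error rate leaves the comparison between organisations intact is sketched below. It is a deliberately simplified two-class model (negative versus not negative) that assumes every label is flipped with the same probability for every firm, which a real classifier may not satisfy. The firm names and shares are made up, and the 0.2 error rate simply mirrors the upper end of the 15-20% mentioned above.

```python
def observed_negative_share(true_share, error_rate):
    """Expected negative share reported by a classifier that flips each label
    (negative <-> not negative) with probability error_rate."""
    return true_share * (1 - error_rate) + (1 - true_share) * error_rate


error_rate = 0.2  # assumed identical for every firm
true_shares = {"Firm A": 0.50, "Firm B": 0.25}  # hypothetical true negative shares

observed = {
    firm: observed_negative_share(share, error_rate)
    for firm, share in true_shares.items()
}
for firm in true_shares:
    print(f"{firm}: true {true_shares[firm]:.2f}, observed {observed[firm]:.2f}")
```

Under these assumptions the gap between the two firms shrinks, but the firm with more negative posts still shows up as the more negative one as long as the error rate stays below 50%.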

We are taking the view that the focus of the sentiment analysis is not an individual bad classification but the aggregate, which is looking extremely indicative: so far, when the tool shows a firm has lots of negative and neutral engagement, it does. And the company should at least examine the reasons behind it. Ultimately, this is a tool that provides a real-time analysis of thousands of data points, giving instant warnings of potential issues that require further investigation rather than the definitive take.
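To make the aggregate view concrete, here is a minimal sketch of rolling per-post labels up into per-company shares and flagging the companies that deserve a closer look. The function names, the firm names, the sample labels and the 40% flag threshold are all hypothetical, chosen only to illustrate the idea.

```python
from collections import Counter, defaultdict


def sentiment_shares(labelled_posts):
    """Turn (company, label) pairs into per-company shares of each label."""
    counts = defaultdict(Counter)
    for company, label in labelled_posts:
        counts[company][label] += 1

    shares = {}
    for company, counter in counts.items():
        total = sum(counter.values())
        shares[company] = {
            label: counter[label] / total
            for label in ("positive", "neutral", "negative")
        }
    return shares


def flag_for_review(shares, max_negative_share=0.4):
    """Return, in sorted order, companies whose negative share exceeds the threshold."""
    return sorted(
        company
        for company, share in shares.items()
        if share["negative"] > max_negative_share
    )


# Made-up labels standing in for the output of a sentiment analyser.
labelled_posts = [
    ("Firm A", "negative"), ("Firm A", "negative"),
    ("Firm A", "neutral"), ("Firm A", "positive"),
    ("Firm B", "positive"), ("Firm B", "positive"),
    ("Firm B", "neutral"), ("Firm B", "negative"),
]

shares = sentiment_shares(labelled_posts)
flagged = flag_for_review(shares)
print(shares)
print("Worth investigating:", flagged)
```

A flag here is a prompt for further investigation, not a verdict: any single label may be wrong, but a consistently high negative share across many posts is harder to explain away.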

Watch this space for more on how we are integrating machine learning into the work we are doing.

Please reach out to us if you’d like to know more. We can help your organization look at their social media engagement using our tools and we would be happy to talk you through this.